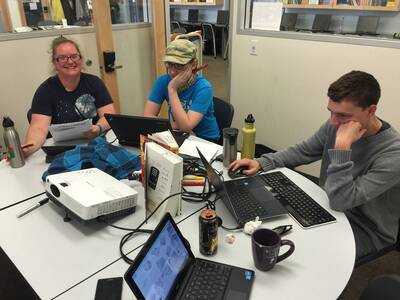

Cabrillo College @ Hack UCSC 2015

Four teams composed exclusively of Cabrillo College students participated in Hack UCSC 2015, and three of the four made it to the semifinal round. In my opinion, most of Cabrillo's projects were at or near the top with regard to creativity and social utility. I was very impressed by Cabrillo students' work and thought. Without exaggeration, I would definitely have placed most of our teams in the finals for the Tech Cares category. I am extremely proud of them, the work they did, and the camaraderie and solidarity that pervaded our group. It really was a great time.

Press:

cccPlan

cccPlan aggregates information from assist.org and individual community colleges to present a broader and more informative list of transfer-level courses between colleges.

Team members: Chad Benson, Jade Keller, William Ritson, et al.

GitHub repositories and other sites

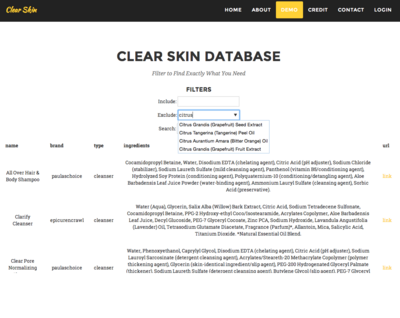

Clear Skin

We are building a database of skincare products that lets the user search for products by ingredient. We used the Kimono Labs Chrome extension to build custom APIs that scrape websites for ingredient lists. We used SQLite for the database itself, PHP on the server, and AJAX to query it from the browser.
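The team's actual schema and PHP code aren't shown here, so the following is only a sketch of how an ingredient search over SQLite could work, written with Python's built-in `sqlite3` module rather than PHP. The table names (`product`, `ingredient`, `product_ingredient`) and the functions `add_product` and `products_with_all` are hypothetical. Each ingredient is stored once and linked to products through a join table, so "find products containing all of these ingredients" becomes a single `GROUP BY ... HAVING` query.

```python
import sqlite3

# Hypothetical schema: products and ingredients linked many-to-many.
SCHEMA = """
CREATE TABLE product (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE ingredient (id INTEGER PRIMARY KEY, name TEXT NOT NULL UNIQUE);
CREATE TABLE product_ingredient (
    product_id INTEGER REFERENCES product(id),
    ingredient_id INTEGER REFERENCES ingredient(id),
    PRIMARY KEY (product_id, ingredient_id)
);
"""


def add_product(conn: sqlite3.Connection, name: str, ingredients: list[str]) -> int:
    """Insert a product and link it to its (lowercased) ingredients."""
    product_id = conn.execute("INSERT INTO product (name) VALUES (?)", (name,)).lastrowid
    for raw in ingredients:
        ing = raw.strip().lower()
        # Store each ingredient only once, then look up its id.
        conn.execute("INSERT OR IGNORE INTO ingredient (name) VALUES (?)", (ing,))
        (ing_id,) = conn.execute("SELECT id FROM ingredient WHERE name = ?", (ing,)).fetchone()
        conn.execute("INSERT OR IGNORE INTO product_ingredient VALUES (?, ?)", (product_id, ing_id))
    return product_id


def products_with_all(conn: sqlite3.Connection, ingredients: list[str]) -> list[str]:
    """Names of products that contain every requested ingredient."""
    names = [i.strip().lower() for i in ingredients]
    if not names:
        return []
    # Only "?" placeholders are interpolated; the values are bound safely.
    placeholders = ",".join("?" * len(names))
    query = f"""
        SELECT p.name FROM product p
        JOIN product_ingredient pi ON pi.product_id = p.id
        JOIN ingredient i ON i.id = pi.ingredient_id
        WHERE i.name IN ({placeholders})
        GROUP BY p.id
        HAVING COUNT(DISTINCT i.id) = ?
        ORDER BY p.name
    """
    return [row[0] for row in conn.execute(query, (*names, len(set(names))))]
```

For example, after `conn.executescript(SCHEMA)` on an in-memory database and adding a product with ingredients `Water, Retinol, Glycerin`, calling `products_with_all(conn, ["glycerin", "retinol"])` would return that product's name. Lowercasing is a simplifying assumption; scraped ingredient lists would likely need more normalization (synonyms, alternate spellings).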

Team members: Christopher Chen, Francisco Piva, Nikolas Payne, Bruno Hernandez

GitHub repositories and other sites

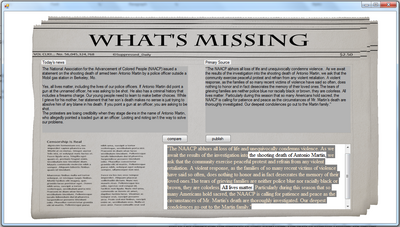

What's Missing

We made a media-analysis tool that takes a primary source and an article and shows you exactly how much of the primary source the article's author failed to include.

It finds the longest common subsequences between the two texts. First it builds a hash table recording the location of every word in the primary source. It uses this table to guess where each possible shared subsequence might start, then iterates forward from each starting point and scores how much the two sequences have in common. The scoring takes into account how long the words are and how different they are; we used the Levenshtein distance to rate each pair of words. It has a few warts and looks like something we came up with in a weekend, but it runs fast and works exactly as we expected. We even caught some biased reporting: look at our screenshot!
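As a rough illustration of the matching idea described above, here is a minimal Python sketch; it is not the team's code. It assumes both texts are split into lowercase words, that a candidate start is any exact word match found through the hash table, and that a run keeps going only while each pair of words has a length-normalized Levenshtein similarity of at least 0.75. Each matched pair contributes its similarity weighted by word length, and a run counts only if its total reaches a hypothetical threshold (`min_score=12.0`), so short runs of common words like "of the" are ignored. All thresholds and function names are illustrative assumptions.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase words; punctuation is dropped."""
    return re.findall(r"[a-z0-9']+", text.lower())


def levenshtein(a: str, b: str) -> int:
    """Dynamic-programming edit distance between two words."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,                # deletion
                           cur[j - 1] + 1,             # insertion
                           prev[j - 1] + (ca != cb)))  # substitution
        prev = cur
    return prev[-1]


def word_score(a: str, b: str, min_similarity: float = 0.75) -> float:
    """Assumed scoring: similarity weighted by word length, 0 if too different."""
    longest = max(len(a), len(b))
    similarity = 1 - levenshtein(a, b) / longest
    return similarity * longest if similarity >= min_similarity else 0.0


def covered_positions(source: list[str], article: list[str],
                      min_score: float = 12.0) -> set[int]:
    """Indices of source words that fall inside a strong enough matched run."""
    # Hash table: word -> every position where it occurs in the primary source.
    index = defaultdict(list)
    for pos, word in enumerate(source):
        index[word].append(pos)

    covered = set()
    for j, word in enumerate(article):
        # Every exact occurrence of this article word is a candidate start.
        for i in index.get(word, []):
            run, score = 0, 0.0
            # Walk forward while the paired words stay similar enough.
            while i + run < len(source) and j + run < len(article):
                s = word_score(source[i + run], article[j + run])
                if s == 0.0:
                    break
                score += s
                run += 1
            if score >= min_score:
                covered.update(range(i, i + run))
    return covered


def missing_fraction(primary: str, article: str) -> float:
    """Share of primary-source words that the article never reproduces."""
    source = tokenize(primary)
    if not source:
        return 0.0
    return 1 - len(covered_positions(source, tokenize(article))) / len(source)
```

For instance, with a primary source reading "We will lower taxes for families, but raise taxes for corporations." and an article quoting only "we will lower taxes for families", the sketch reports that 5 of the 11 source words (about 45%) were left out. Trying every anchor is simple but can get slow on long, repetitive texts; a faster version could skip anchors that already sit inside a recorded run.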

Team members: Bradley Lacombe, Will Mosher, Julya Wacha
